Parallel Programming

How do we get the most out of the resources we have?

It used to be that if you wanted a gaming PC you had to buy an Intel chip. These chips often had fewer total cores than their AMD counterparts, but each core was faster than AMD's. If we simply added up the computing power of each chip without factoring in how that power is split across cores, AMD would win every time. However, ignoring that variable inevitably leads to the wrong conclusion.

That's because many applications are linear.

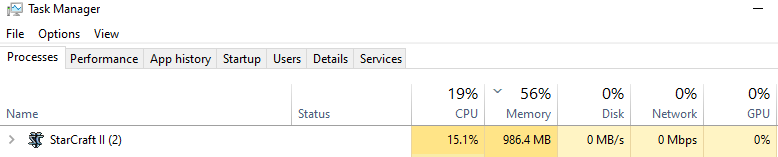

Back when I was playing Starcraft competitively I had a very hard time playing 4v4 games on my older computer. When I did a CONTROL-ALT-DELETE into the Task Manager, what did I find?

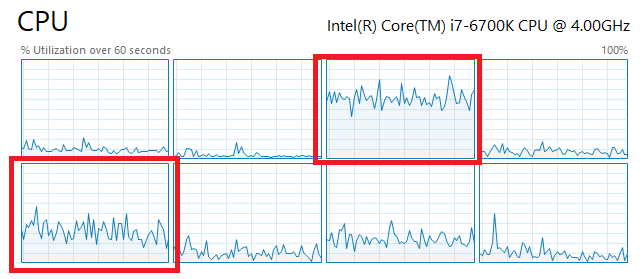

Even though Starcraft was lagging out and running at maximum capacity, most of my CPU wasn't being used, even though CPU power was clearly the bottleneck. Why would this happen?

Because of the way Starcraft is programmed, it basically only uses a single core for the hardest task (keeping track of the location/HP/damage/status of every unit on the map). My CPU still had 6 other cores that weren't being used at all, even though I was basically lagging out of the game with so many units on the map. This is because Starcraft (like most applications) is a single-threaded application for the most part.

Threads

A single CPU core can only do one thing at a time. Using an operating system with threading, the computer tricks us stupid humans into thinking that many things are happening all at once.

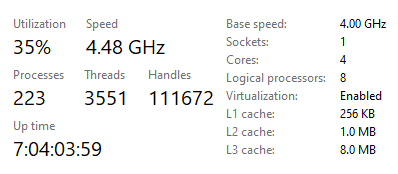

For example, it looks like my computer currently has 3551 threads running... all at the same time. Hot damn, that's a lot of threads. Each one of these threads turns on and off based on whether the CPU is currently allocated to it. The CPU bounces back and forth between all of them, making it appear as though they are all running at the same time. They are not, I assure you: at any given instant, only as many threads as there are cores are actually running.

For those of us who were using this tech during the early days, we know all about the Blue Screen of Death and the problems that used to come up when the computer got shut down by losing power instead of properly clicking the button. Windows used to be so so so much worse than it is today. Threading creates so many bugs. So many programs stepping on each other's toes and messing up mutual exclusion (two threads manipulating the same data out of order).
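To make the mutual exclusion problem concrete, here is a minimal Python sketch. The `Counter` class and the `hammer` and `run` helpers are made-up names for illustration, not taken from any real program. Several threads bump the same tally, and a `threading.Lock` makes sure only one of them can do the read-then-write at a time. Without the lock, two threads could both read the same old value and one update could get lost.

```python
import threading

class Counter:
    """Hypothetical shared tally, guarded so one thread updates it at a time."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def add(self, amount):
        # Read-modify-write is the critical section: the lock keeps two
        # threads from reading the same old value and overwriting each other.
        with self._lock:
            current = self.value
            self.value = current + amount

def hammer(counter, times):
    """Worker: increment the shared counter `times` times."""
    for _ in range(times):
        counter.add(1)

def run(workers=8, times=10_000):
    """Start `workers` threads against one counter and return the final total."""
    counter = Counter()
    threads = [
        threading.Thread(target=hammer, args=(counter, times))
        for _ in range(workers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value
```

With the lock in place, `run(workers, times)` should always come back as `workers * times`. The price is that the threads now take turns inside `add`, which is exactly the kind of trade-off that makes threaded code hard to get both right and fast.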

The point here is that parallel programming (using all the cores at once) is often a difficult task, and many developers opt to avoid it entirely to escape the headaches of all the bugs of multiple threads running at the same time and creating all kinds of crazy situations that shouldn't happen.

Remember the EOS ICO? Remember why @dan was so gung ho on that project? The main reason was basically multiple threads running at the same time so the network could scale to infinity (Hive, and most other blockchains, absolutely do not do this). Didn't work out so well, did it? Rather RAM became the bottleneck and EOS is still struggling. I wish them the best in their journey of forking out Block.One and getting rid of their overlords like we did.

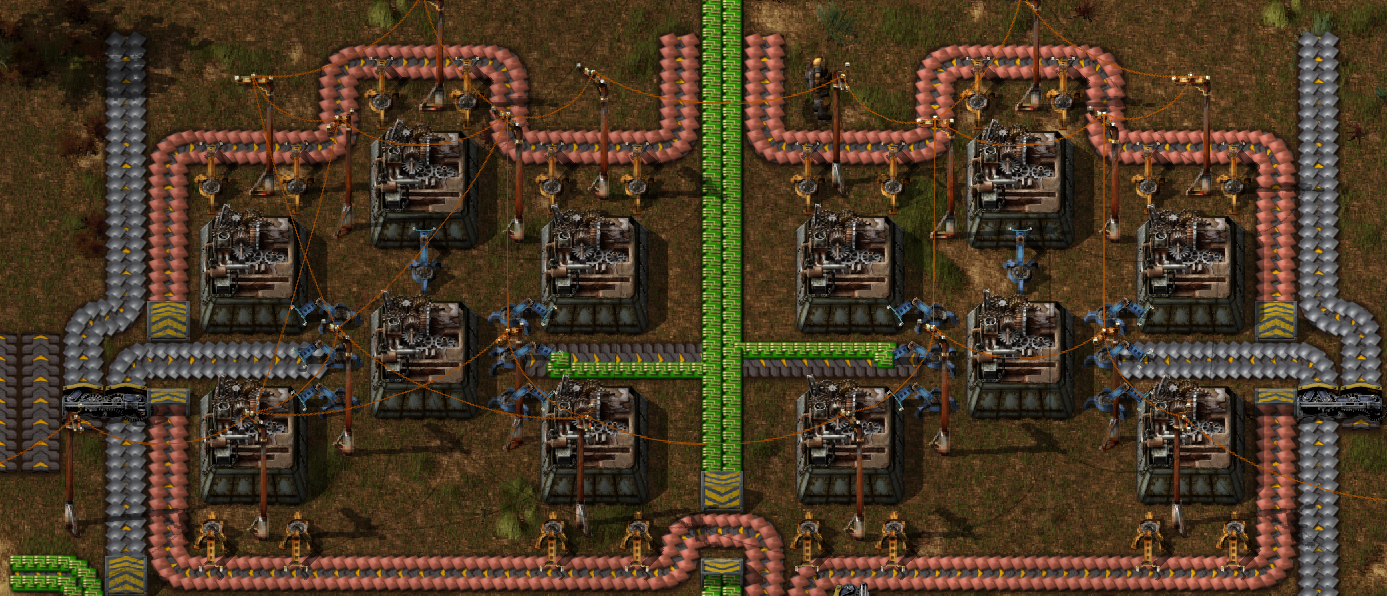

Factorio Magic

The wizards that created this game did a literally unbelievable job at parallel programming. Anyone who's played the game knows this. In fact my old roommate was playing this game on a computer that was over ten years old and could barely run Windows OS, yet this game ran really well even with a million different things happening at the same time. It really is a great example of how powerful using every core can be.

At the same time, anyone who's played multiplayer knows there is a price to be paid. It's very easy to see why online games opt to only use a single thread, because syncing those threads is a nightmare. When joining another person's game I was lucky to even get 300-500 ping (0.3-0.5 second delay between actions). This is wholly unacceptable for most games (especially competitive ones). Luckily, Factorio isn't a competitive game (cooperative sandbox) so this isn't a big deal.

Poker Database Example.

At one point when I was making my own custom database I was also tinkering with threads. The idea was to simulate a million hands of poker as fast as 'humanly' possible. I figured if I split up the work across all cores and then added up the data at the end I'd be fine. The logic was solid but I never actually got it working properly. In the end it wasn't even necessary, and when Full Tilt got banned in the USA (search "black friday poker") I basically lost interest in the project.
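The split-the-work-then-add-it-up idea can be sketched in Python like this. To be clear, this is not the code from that project: `simulate_chunk`, `split_work`, and `simulate_parallel` are hypothetical names, and "dealing five cards and checking whether any two share a rank" is just a stand-in for a real poker hand. Each chunk gets its own seeded random generator so the workers never share state, and the only combining step is a sum at the end.

```python
import random
from concurrent.futures import ProcessPoolExecutor

def simulate_chunk(job):
    """Deal `hands` five-card hands with a private RNG.

    Returns (hands dealt, hands where at least two cards share a rank).
    """
    seed, hands = job
    rng = random.Random(seed)  # private generator: no state shared between workers
    paired = 0
    for _ in range(hands):
        ranks = [card % 13 for card in rng.sample(range(52), 5)]
        if len(set(ranks)) < 5:
            paired += 1
    return hands, paired

def split_work(total_hands, workers):
    """Divide the hands as evenly as possible; the chunk index doubles as the seed."""
    base, extra = divmod(total_hands, workers)
    return [(i, base + (1 if i < extra else 0)) for i in range(workers)]

def simulate_parallel(total_hands, workers, executor_cls=ProcessPoolExecutor):
    """Run every chunk in its own worker, then add the partial results together."""
    jobs = split_work(total_hands, workers)
    with executor_cls(max_workers=workers) as pool:
        results = list(pool.map(simulate_chunk, jobs))
    total = sum(hands for hands, _ in results)
    paired = sum(pairs for _, pairs in results)
    return total, paired
```

Processes are used here instead of threads because CPython threads do not run CPU-bound Python code on several cores at once. When running this as a script, call `simulate_parallel(1_000_000, 8)` from under an `if __name__ == "__main__":` guard so the worker processes can start cleanly on every platform.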

The Context Switch

There is an overhead cost for opening threads, and another overhead cost for closing them and combining the data accurately. If the algorithm we are running depends on the data from the previous step, parallel programming isn't even an option. If threads need information stored on other threads, the complication becomes absurd very quickly: threading runs into massive diminishing returns while adding huge amounts of complexity and bugs.
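Here is a rough Python sketch of that overhead, using made-up helper names (`tiny_task`, `run_sequential`, `run_thread_per_task`, `timed`). The same trivial job is done once in a plain loop and once with a brand new thread per item. Each thread writes to its own slot in the list, so no lock is needed; the only extra cost is starting the threads and waiting for them to finish.

```python
import threading
import time

def tiny_task(results, index):
    """A job so small that starting a thread costs more than doing the work."""
    results[index] = index * index

def run_sequential(n):
    results = [0] * n
    for i in range(n):
        tiny_task(results, i)
    return results

def run_thread_per_task(n):
    results = [0] * n
    threads = [
        threading.Thread(target=tiny_task, args=(results, i)) for i in range(n)
    ]
    for t in threads:
        t.start()  # overhead: each thread has to be created and scheduled
    for t in threads:
        t.join()   # overhead: wait for every thread before using the results
    return results

def timed(fn, n):
    """Return (result, elapsed seconds) for one call of fn(n)."""
    start = time.perf_counter()
    out = fn(n)
    return out, time.perf_counter() - start

if __name__ == "__main__":
    n = 1000
    seq, seq_seconds = timed(run_sequential, n)
    thr, thr_seconds = timed(run_thread_per_task, n)
    assert seq == thr  # same answer either way; only the cost differs
    print(f"sequential: {seq_seconds:.4f}s  thread-per-task: {thr_seconds:.4f}s")
```

The exact numbers depend on the machine, so none are quoted here, but for work this small the threaded version should come out noticeably slower. Threads only start paying for themselves when each one has enough work to dwarf its own setup and teardown.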

However, modern developers are getting better and better at multi-threaded apps as time goes on. Now that multi-core tech has been around for over two decades, the software, infrastructure, and documentation all exist to make parallel processing much less painful than it was 20 years ago. Just imagine how much easier crypto will be in 20 years. We are still very early in the game.

Blockchain is single-threaded by design.

How else could a series of blocks be anything but a series of blocks? In many respects, blockchain is a step backwards in the world of tech. It is very, very inefficient, and the only benefit is trust and consensus. Turns out trust and consensus are pretty important, and crypto continues to gain exponential value and adoption.
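A toy Python sketch shows why the chain has to be built in order. This is not Hive's actual block format: `block_hash`, `build_chain`, and the all-zero genesis value are assumptions made up for illustration. The one real property it borrows is that every block commits to the hash of the block before it, so block N cannot be computed until block N-1 is finished.

```python
import hashlib

def block_hash(previous_hash, payload):
    """Hash a block's payload together with the hash of the block before it."""
    data = (previous_hash + payload).encode("utf-8")
    return hashlib.sha256(data).hexdigest()

def build_chain(payloads, genesis="0" * 64):
    """Return the list of block hashes for `payloads`, in order."""
    hashes = []
    previous = genesis
    for payload in payloads:
        # This step needs the result of the previous iteration, so the loop
        # cannot be handed out to several cores and stitched back together.
        previous = block_hash(previous, payload)
        hashes.append(previous)
    return hashes
```

Changing the first payload changes every hash after it, which is what makes the history tamper-evident and also what forces the work into a single file line.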

If it ain't broke, don't fix it.

Many developers (including myself) attempt to optimize code that doesn't actually need to be optimized. A piece of code could be slow and inefficient as hell, but if modern computers can still give users instant gratification, why would we spend time optimizing this code? This is why scripting languages (like JavaScript and Python) are so popular. Rather than using a more efficient language, we opt to use one where the code can be cranked out super fast. Then if there is a bottleneck we might opt to rewrite that small part of the program in a faster language like Java or C++ or C or even assembler.
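Finding that small part means measuring before rewriting anything. Here is a minimal Python sketch with two made-up stages, `parse` and a deliberately wasteful `score`, plus a hypothetical `find_bottleneck` helper that times each one and reports the slowest.

```python
import time

def parse(lines):
    """Cheap stage: turn text into integers."""
    return [int(line) for line in lines]

def score(numbers):
    """Deliberately quadratic stage: count every pair where a < b."""
    return sum(1 for a in numbers for b in numbers if a < b)

def find_bottleneck(n=600):
    """Time each stage and return (result, timings, name of the slowest stage)."""
    lines = [str(i) for i in range(n)]
    timings = {}

    start = time.perf_counter()
    numbers = parse(lines)
    timings["parse"] = time.perf_counter() - start

    start = time.perf_counter()
    result = score(numbers)
    timings["score"] = time.perf_counter() - start

    slowest = max(timings, key=timings.get)
    return result, timings, slowest
```

In this sketch only `score` would be worth rewriting in a faster language (or just with a better algorithm); `parse` can stay as quick and dirty as it likes.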

On the flip side of this argument, if a developer gets in too deep on a project it might actually be impossible to multi-thread the function they need because all the infrastructure they built around it is totally incompatible with that solution. Planning is key to development. If a development team has 12 months to deliver a project, it might sound absurd, but that team actually doesn't even need to start coding for three months. The planning phase is so key to avoiding bugs and rewriting huge sections of code that it can literally take up 25% of all the time and effort put into the project.

Flat architecture.

This is a concept that is central to both crypto and parallel programming. Rather than build up, we are trying to build out. Rather than creating a pyramid of hierarchies, we are spread out on the ground floor trying to build the infrastructure of a new economy that can scale up exponentially better than the system we have today. Rather than one entity taking centralized control, we delegate out to multiple agents working together. Yes, this creates a more complex system, but it's worth it in the correct context.

Pseudo solutions via centralization.

While the layer-one of Hive will never be multi-threaded, we can get the same effect using centralization and second-layer tech. Splinterlands taking their operations off-chain is a great example of this. Those operations didn't need to be bloating the chain, so they were removed. If developers build on Hive and only use Hive for money transfer and account security, we could build thousands of centralized applications on Hive and have zero scaling issues.

That's because the bulk of the work is done on centralized servers that only outsource a very small amount of information to the main chain. Centralization is a powerful solution in terms of efficiency and focused direction and progress. Just because something is centralized doesn't automatically mean it is bad. If the trust issues are decentralized but the bulk of the data is centralized, that's actually the ideal solution; the best of both worlds. Take note.

Conclusion

Parallel programming shares many common themes with crypto.

If we want to scale up, we better figure it out.

Posted Using LeoFinance Beta

Something good to know today, that's why it requires a lot of resources to return the desired results when we do transactions and data fetching.

But, with this pseudo solution a decentralized network would not remain a secure network, it could create a lot of blackholes. no!

Nicely said, but what I believe is that everyone is just trying, and the ones creating the system are not the ones who run in the system, so the system can lag or malfunction on its own.

Note taken. I used to be on the bandwagon that hated everything centralized, or at least looked at it skeptically. Oh well... we learn every day.

The only point of decentralization is to establish trust.

If the trust is already there then decentralization is a pointless waste of time and resources.

Do you still play StarCraft? Love that game

Posted using LeoFinance Mobile

It's been a while but I'm easily a Diamond 1 player and I've made it to Master 3 a couple times in 1v1 zerg.

More gates = more warp

Never forget gate proofs supply and resources :)

Proof of gate.

Parallel programming is critical for large scale projects in which speed and accuracy are needed. It is a complex task, but allows developers, researchers, and users to accomplish research and analysis quicker than with a program that can only process one task at a time.

great content!!

If you make a custom blockchain, you can :P Very good idea, especially for multicore server-based systems.