For-Profit A.I.

Many of the fears voiced about the future of A.I. concern machines becoming better, smarter, stronger and faster, superior in every way, until one day they decide that we humans are no longer needed or, worse, see us as an enemy or as a parent that needs to die.

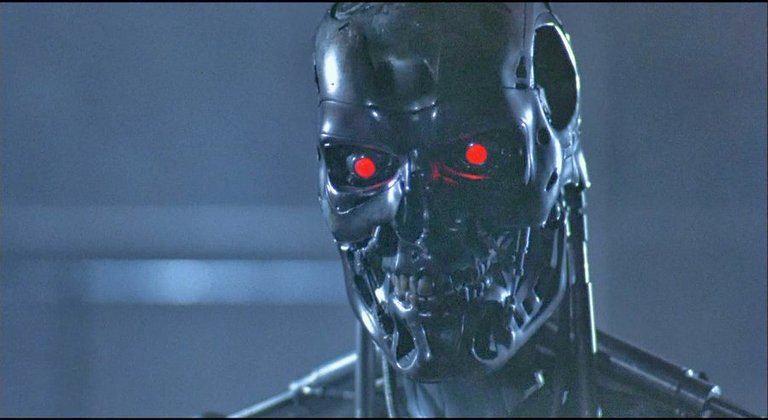

Terminator

Image by BagoGames - source: Flickr

In popular science fiction like the Terminator movies, Blade Runner and the more recent and (in my opinion) brilliant Ex Machina, this fear of A.I. threatening humans and humanity by outsmarting us, and of us losing control over our own creations, is often masterfully depicted. Celebrities like Elon Musk and Stephen Hawking have voiced similar concerns, and the internet is full of articles and blogs about that ever-nearing singularity:

The technological singularity (also, simply, the singularity) is the hypothesis that the invention of artificial superintelligence (ASI) will abruptly trigger runaway technological growth, resulting in unfathomable changes to human civilization. According to this hypothesis, an upgradable intelligent agent (such as a computer running software-based artificial general intelligence) would enter a "runaway reaction" of self-improvement cycles, with each new and more intelligent generation appearing more and more rapidly, causing an intelligence explosion and resulting in a powerful superintelligence that would, qualitatively, far surpass all human intelligence.

source: Wikipedia

These are legitimate concerns, of course, but we're already being manipulated by artificial intelligence in a completely different manner. As is so often the case, the main threat is nothing external like the runaway robots and computers of popular culture. Instead it is the way artificial intelligence, machine learning in particular, is used by the powerful among us, that's the real scare. And it is already happening. I've already touched on this abuse of "intelligent" algorithms in combination with "Big Data" in my post Surveillance Capitalism, about how popular social media enclose us in our own confirmation bubbles, and how this polarizes discussions and prevents consensus. The mere fact that much of the machine learning technology is developed by companies in the business of capturing and selling our data (plus our attention and therefore our time!) to advertisers and others should raise the hairs on the back of your neck. Because once again we're seeing how everything in our society is made subservient to profits, instead of serving mankind.

The amount of data being collected is enormous; it's safe to say that your every move on these social platforms and online stores is being recorded. Machine learning algorithms study our online behavior to the extent that they can predict which video we would like to watch next or which product might spark our interest. But this is just the beginning, because these algorithms can also be used to influence our behavior during elections, and have done so already.

New research suggests these messages have striking real-world power. On Election Day 2010, these reminders, when adorned with faces of those who'd clicked "I voted," drove an additional 340,000 voters to polls nationwide, according to research conducted by Facebook and academics, and published today in Nature.

James Fowler, a political scientist at the University of California, San Diego, who led the research says "there were one-third of a million people who actually showed up at the polls who wouldn't otherwise have if the message hadn't been shown." The reason, he says, was straightforward. "Seeing the faces of friends accounted for all this effect on voting. And it affected not only people who saw them, but friends, and friends of friends."

source: MIT Technology Review - September 12, 2012

I think it's very easy to imagine how such power could be, and is, abused today. I would like to invite you to watch Zeynep Tufekci's TED Talk about the very real threat these developments pose to our freedoms, and why we should all be aware of these dangers now, as we're no longer talking about some distant future.

We're building a dystopia just to make people click on ads | Zeynep Tufekci

Thanks so much for visiting my blog and reading my posts, dear reader, I appreciate that a lot :-) If you like my content, please consider leaving a comment, upvote or resteem. I'll be back here tomorrow and sincerely hope you'll join me. Until then, stay safe, stay healthy!



What if Skynet isn't really the enemy? The enemy is the one who controls Skynet.

Yes, that's what I said: "...it is the way artificial intelligence, machine learning in particular, is used by the powerful among us, that's the real scare." Thanks for stopping by @leprechaun :-)